Introduction

Like other prominent touch-aware phone platforms, Microsoft’s Windows Phone “Mango” offers a rich framework for capturing touch and multi-touch activity on Windows Phone devices.

In this article, we will learn the underlying APIs that developers need to work with to leverage the true power of the Windows Phone platform.

Touch Support

Touch support in Windows Phone is exposed to developers through the FrameReported event of the Touch class, which lives in the System.Windows.Input namespace. The Touch class is implemented in System.Windows.dll, one of the default libraries included in all Windows Phone applications. The FrameReported event is raised for any touch activity across the currently running application.

Each frame represents a set of touch points, which can be retrieved by calling GetTouchPoints or GetPrimaryTouchPoint on the TouchFrameEventArgs instance passed to the FrameReported event handler.
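As a rough illustration of reading every touch point in a frame, the sketch below walks the collection returned by GetTouchPoints and writes each point to the debug output. The handler name LogAllTouchPoints and the MainPage class name are assumptions made for this example; the handler would be attached to Touch.FrameReported like any other.

```csharp
using System.Diagnostics;
using System.Windows.Input;
using Microsoft.Phone.Controls;

public partial class MainPage : PhoneApplicationPage
{
    // Hypothetical handler name, used only for this illustration.
    void LogAllTouchPoints(object sender, TouchFrameEventArgs e)
    {
        // Positions are reported relative to the element passed in (this page).
        TouchPointCollection points = e.GetTouchPoints(this);

        foreach (TouchPoint point in points)
        {
            // TouchDevice.Id distinguishes one finger from another.
            string line = string.Format("Touch {0}: {1} at ({2}, {3})",
                point.TouchDevice.Id, point.Action,
                point.Position.X, point.Position.Y);
            Debug.WriteLine(line);
        }
    }
}
```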

The FrameReported event is generated in the following cases:

- When a finger makes screen contact

- When a finger slides across the screen

- When a finger is released from the screen

When several touches are in progress, you can get the primary touch point, which belongs to the first finger that made contact, by calling GetPrimaryTouchPoint on the TouchFrameEventArgs instance passed to the FrameReported event handler.
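The three cases listed above correspond to the values of the TouchAction enumeration. A minimal sketch of telling them apart for the primary touch point might look like the following; the handler name DescribePrimaryTouch and the MainPage class name are hypothetical.

```csharp
using System.Diagnostics;
using System.Windows.Input;
using Microsoft.Phone.Controls;

public partial class MainPage : PhoneApplicationPage
{
    // Hypothetical handler name, used only for this illustration.
    void DescribePrimaryTouch(object sender, TouchFrameEventArgs e)
    {
        TouchPoint primary = e.GetPrimaryTouchPoint(this);
        if (primary == null)
        {
            return; // Defensive check: no primary touch point in this frame.
        }

        switch (primary.Action)
        {
            case TouchAction.Down:
                Debug.WriteLine("Finger made contact with the screen");
                break;
            case TouchAction.Move:
                Debug.WriteLine("Finger is sliding across the screen");
                break;
            case TouchAction.Up:
                Debug.WriteLine("Finger was released from the screen");
                break;
        }
    }
}
```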

Hands-On

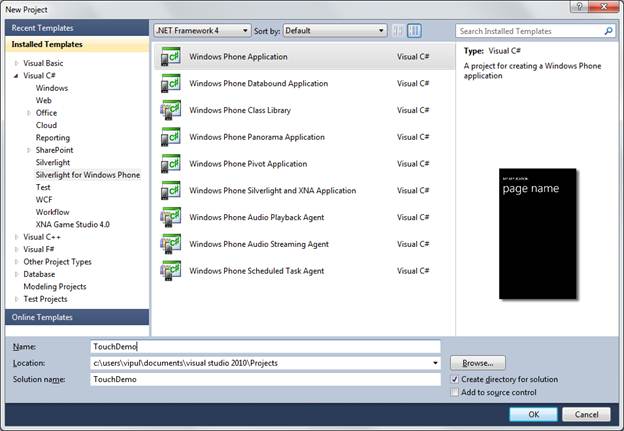

Start Visual Studio and create a new Windows Phone project titled “TouchDemo”.

Create a new project in Visual Studio

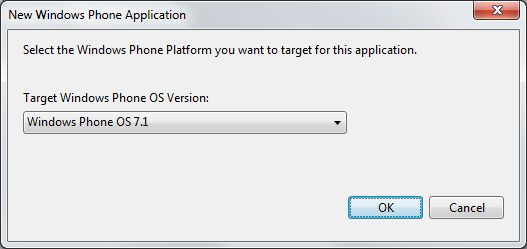

When prompted for Windows Phone OS version, select “Windows Phone OS 7.1”.

Select Windows Phone OS 7.1

Let’s add two TextBlocks to the page, one with the text “TouchPoint” and the other blank. The second TextBlock, named textBlockTouchPoint, will display the position of the touch.
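If you prefer not to use the designer, the two TextBlocks could also be created in the code-behind. This is only a sketch under assumptions: it presumes the page class is called MainPage and that the page still contains the ContentPanel grid from the default project template. Only the name textBlockTouchPoint comes from this article.

```csharp
using System.Windows.Controls;
using Microsoft.Phone.Controls;

public partial class MainPage : PhoneApplicationPage
{
    // Blank TextBlock that will later show the touch position.
    // Declare it here only if it is not already defined in the page's XAML.
    private readonly TextBlock textBlockTouchPoint = new TextBlock();

    public MainPage()
    {
        InitializeComponent();

        // Stack the caption and the value vertically.
        var panel = new StackPanel();
        panel.Children.Add(new TextBlock { Text = "TouchPoint" });
        panel.Children.Add(textBlockTouchPoint);

        // ContentPanel is assumed to be the grid from the default template.
        ContentPanel.Children.Add(panel);
    }
}
```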

Now add an event handler for processing FrameReported events. We’ll call our handler “MyAppFrameReported”. In this event handler, we get the primary touch point and, if the touch action is down or move, show its position in the TextBlock we created above.

    void MyAppFrameReported(object sender, TouchFrameEventArgs e)
    {
        TouchPoint tp = e.GetPrimaryTouchPoint(this);

        // Show the position only while the finger is down or moving.
        if (tp != null && tp.Action != TouchAction.Up)
        {
            textBlockTouchPoint.Text = tp.Position.X.ToString() + "," + tp.Position.Y.ToString();
        }
    }

Next, we need to wire our event handler. We will add a hook to the event when we navigate to the page.

    protected override void OnNavigatedTo(System.Windows.Navigation.NavigationEventArgs e)
    {
        base.OnNavigatedTo(e);
        Touch.FrameReported += MyAppFrameReported;
    }

We also want to make sure that the event handler is not registered more than once, which would make it run multiple times for each frame. To prevent that, we de-register the handler when we navigate away from the page, in the OnNavigatedFrom override.

    protected override void OnNavigatedFrom(System.Windows.Navigation.NavigationEventArgs e)
    {
        base.OnNavigatedFrom(e);
        Touch.FrameReported -= MyAppFrameReported;
    }

Now our application is ready to handle touch events and show the location of each touch. Note that the Visual Studio emulator does not raise the FrameReported event, since the event requires touch-sensitive hardware. To test the application, deploy it to a phone.

When you run the application, you will notice that on every touch, the TouchPoint location is updated. That is because, for every FrameReported event fired, we look at the position and report it.

Summary

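I hope you have found this information useful. Be careful to detach FrameReported handlers as soon as they are no longer needed. Because the event is raised for touch activity anywhere in the running application, a handler that is still attached after the application navigates away from the page will keep running, with unintended consequences.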

About the author

Vipul Patel is a Program Manager currently working at Amazon Corporation. He has formerly worked at Microsoft in the Lync team and in the .NET team (in the Base Class libraries and the Debugging and Profiling team). He can be reached at vipul_d_patel@hotmail.com