Introduction

By using Optical Character Recognition (OCR), you can detect and extract handwritten and printed text present in an image. The API works with different surfaces and backgrounds. To use OCR as a service, you have to get a subscription key from the Microsoft Cognitive Services portal. The steps for registering and obtaining API keys are described in my previous article, “Analyzing Image Content Programmatically: Using the Microsoft Cognitive Vision API.”

In this post, I will demonstrate printed-text and handwriting recognition by uploading a locally stored image and sending it to the Cognitive Services API.

Embedded Text Recognition Inside an Image

Step 1

Create a new ASP.NET Web application in Visual Studio. Add a new ASPX page named TextRecognition.aspx. Then, replace the HTML code of TextRecognition.aspx with the following code.

In the following ASPX markup, I have created an ASP.NET Panel containing an Image control, a TextBox, a file upload control, and a Submit button. A locally browsed image with handwritten or printed text is displayed in the Image control, and after the Submit button is clicked, the TextBox displays the text recognized in the image.

```aspx
<%@ Page Language="C#" Async="true" AutoEventWireup="true" CodeBehind="TextRecognition.aspx.cs" Inherits="TextRecognition.TextRecognition" %>
<%-- Async="true" allows asynchronous operations in the code-behind. --%>
<!DOCTYPE html>
<html>
<head runat="server">
    <title>OCR Recognition</title>
</head>
<body>
    <form id="myform" runat="server">
        <div></div>
        <asp:Panel ID="ImagePanel" runat="server" Height="375px">
            <asp:Image ID="MyImage" runat="server" Height="342px" Width="370px" />
            <asp:TextBox ID="MyTestBox" runat="server" TextMode="MultiLine" Height="337px" Width="348px"></asp:TextBox>
            <br />
            <input id="MyFileUpload" type="file" runat="server" />
            <%-- onserverclick (not onclick) wires the button to the server-side handler. --%>
            <input id="btnSubmit" runat="server" type="submit" value="Submit" onserverclick="btnSubmit_Click" />
            <br />
        </asp:Panel>
    </form>
</body>
</html>
```

Step 2

Add the following using directives to your TextRecognition.aspx.cs code file.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Web;
using System.Web.UI;
using System.Web.UI.WebControls;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Diagnostics;
```

Step 3

Add the following app settings entries to your Web.config file. Replace the Subscription-Key value with your own subscription key registered in the Azure portal. The APIuri value is the endpoint of the OCR cognitive service, and RequestParameters holds the query string that is appended to it.

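```xml
<appSettings>
  <!-- "&" must be escaped as "&amp;" inside an XML attribute value -->
  <add key="RequestParameters" value="language=unk&amp;detectOrientation=true" />
  <add key="APIuri" value="https://westcentralus.api.cognitive.microsoft.com/vision/v1.0/ocr?" />
  <!-- Replace with your own subscription key -->
  <add key="Subscription-Key" value="YOUR-SUBSCRIPTION-KEY" />
  <!-- The image is posted as raw bytes, so the body is binary data -->
  <add key="Contenttypes" value="application/octet-stream" />
</appSettings>
```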
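Because Web.config is an XML file, the & that separates the two request parameters has to be written as &amp;; an unescaped & makes the file fail to parse. The following C# sketch loads an appSettings fragment with System.Xml.Linq, reads the values back the way ConfigurationManager.AppSettings returns them, and checks that APIuri plus RequestParameters forms a valid URI. AppSettingsCheck is a hypothetical helper for illustration only; the fragment assumes a placeholder key and application/octet-stream, the content type the API expects for a raw image body.

```csharp
using System;
using System.Linq;
using System.Xml.Linq;

public static class AppSettingsCheck
{
    // Mirrors the Step 3 entries. Assumptions: "&" is escaped as "&amp;",
    // the key is a placeholder, and the content type is application/octet-stream.
    public const string Fragment = @"<appSettings>
  <add key=""RequestParameters"" value=""language=unk&amp;detectOrientation=true"" />
  <add key=""APIuri"" value=""https://westcentralus.api.cognitive.microsoft.com/vision/v1.0/ocr?"" />
  <add key=""Subscription-Key"" value=""YOUR-SUBSCRIPTION-KEY"" />
  <add key=""Contenttypes"" value=""application/octet-stream"" />
</appSettings>";

    // Returns the unescaped value of the <add> entry with the given key.
    public static string Get(string key)
    {
        XElement root = XElement.Parse(Fragment);
        return root.Elements("add")
                   .Single(e => (string)e.Attribute("key") == key)
                   .Attribute("value").Value;
    }

    // Same concatenation as ReadURI() + RequestParameters() in the code-behind.
    public static Uri RequestUri()
    {
        return new Uri(Get("APIuri") + Get("RequestParameters"));
    }
}
```

The parser hands back a plain &, so the code-behind receives language=unk&detectOrientation=true without any extra unescaping.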

Step 4

Now, add the following code to your TextRecognition.aspx.cs code-behind file. The static helper functions return the appSettings values defined in Step 3. The btnSubmit_Click event handler runs when the Submit button is clicked; it saves the uploaded image and calls the asynchronous CallAPIforOCR method. CallAPIforOCR uses the GetByteArray function to read the local image into a byte array, which is then posted to Cognitive Services for recognition.

```csharp
public partial class TextRecognition : System.Web.UI.Page
{
    // Raw JSON from the handwriting call (see "Recognize Handwriting").
    static string responsehandwritting;

    static string Subscriptionkey()
    {
        return System.Configuration.ConfigurationManager.AppSettings["Subscription-Key"];
    }

    static string RequestParameters()
    {
        return System.Configuration.ConfigurationManager.AppSettings["RequestParameters"];
    }

    static string ReadURI()
    {
        return System.Configuration.ConfigurationManager.AppSettings["APIuri"];
    }

    static string Contenttypes()
    {
        return System.Configuration.ConfigurationManager.AppSettings["Contenttypes"];
    }

    // An async event handler requires Async="true" in the @Page directive.
    protected async void btnSubmit_Click(object sender, EventArgs e)
    {
        if (MyFileUpload.PostedFile == null || MyFileUpload.PostedFile.ContentLength == 0)
            return; // nothing was uploaded

        string fileName = Path.GetFileName(MyFileUpload.PostedFile.FileName);
        string virtualPath = "~/images/" + fileName;
        // File APIs need a physical path, not a "~/" virtual path.
        string physicalPath = Server.MapPath(virtualPath);

        MyFileUpload.PostedFile.SaveAs(physicalPath);
        MyImage.ImageUrl = virtualPath;

        // Awaiting ensures the text box is filled only after the response arrives.
        MyTestBox.Text = await CallAPIforOCR(physicalPath);
    }

    static byte[] GetByteArray(string LocalimageFilePath)
    {
        // Opens, reads, and closes the file in one call.
        return File.ReadAllBytes(LocalimageFilePath);
    }

    // Optical Character Recognition: posts the image and returns the JSON response.
    static async System.Threading.Tasks.Task<string> CallAPIforOCR(string LocalimageFilePath)
    {
        using (var ComputerVisionAPIclient = new HttpClient())
        {
            try
            {
                // Header name has no embedded space.
                ComputerVisionAPIclient.DefaultRequestHeaders.Add(
                    "Ocp-Apim-Subscription-Key", Subscriptionkey());
                string APIuri = ReadURI() + RequestParameters();

                var content = new ByteArrayContent(GetByteArray(LocalimageFilePath));
                content.Headers.ContentType = new MediaTypeHeaderValue(Contenttypes());

                HttpResponseMessage myresponse =
                    await ComputerVisionAPIclient.PostAsync(APIuri, content);
                myresponse.EnsureSuccessStatusCode();
                return await myresponse.Content.ReadAsStringAsync();
            }
            catch (Exception e)
            {
                EventLog.WriteEntry("Text Recognition Error",
                    e.Message + " Trace: " + e.StackTrace,
                    EventLogEntryType.Error, 121, short.MaxValue);
                return string.Empty;
            }
        }
    }
}
```
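To see what CallAPIforOCR sends without calling the service, the request can be built and inspected offline. The sketch below assembles the same POST as an HttpRequestMessage: the endpoint plus query string, the Ocp-Apim-Subscription-Key header, and the image bytes as a binary body. OcrRequestSketch and its parameters are hypothetical names standing in for the appSettings values; the key and bytes used with it are placeholders, not real data.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;

public static class OcrRequestSketch
{
    // Hypothetical helper: builds, but does not send, the POST that CallAPIforOCR makes.
    // apiUri, requestParameters, and subscriptionKey stand in for the appSettings values.
    public static HttpRequestMessage Build(string apiUri, string requestParameters,
                                           string subscriptionKey, byte[] imageBytes)
    {
        // Raw image bytes go in the body, labeled as binary data.
        var content = new ByteArrayContent(imageBytes);
        content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");

        var request = new HttpRequestMessage(HttpMethod.Post, apiUri + requestParameters)
        {
            Content = content
        };

        // Header name exactly as the service expects it, with no embedded spaces.
        request.Headers.Add("Ocp-Apim-Subscription-Key", subscriptionKey);
        return request;
    }
}
```

Passing the resulting message to HttpClient.SendAsync would send it; building it separately keeps the header and content-type choices easy to check in a unit test.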

Step 5

Now, set TextRecognition.aspx as the start page and run the Web application. When the page is displayed, click the Browse button and open a local image with printed text on it. Click the Submit button to see the output.

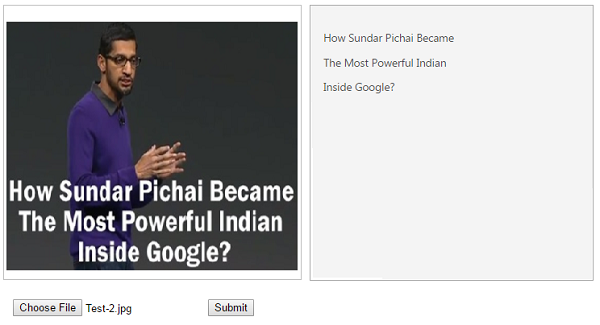

For the demo, I have used the following image downloaded from the Internet. A successful call to the Cognitive Services OCR API returns the recognized text along with bounding boxes for each region, line, and word. The embedded text is displayed in the text box on the right side of the panel.

Figure 1: Output of OCR recognition
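Each BoundingBox in the JSON response is a string of four integers. My understanding from the OCR documentation is that these are the left edge, top edge, width, and height in pixels, and the sample response is consistent with that reading: the region 21,246,550,132 starts at the same corner as its first line (21,246,550,33) and ends at y = 378, exactly where its last line (155,338,294,40) ends. OcrBox below is a hypothetical helper that parses such a string under that assumption.

```csharp
using System;
using System.Globalization;
using System.Linq;

// Hypothetical helper: one OCR bounding box, assumed to be left, top, width, height in pixels.
public readonly struct OcrBox
{
    public OcrBox(int left, int top, int width, int height)
    {
        Left = left;
        Top = top;
        Width = width;
        Height = height;
    }

    public int Left { get; }
    public int Top { get; }
    public int Width { get; }
    public int Height { get; }

    // Derived edges, handy for checking how lines and words nest inside a region.
    public int Right => Left + Width;
    public int Bottom => Top + Height;

    // Parses a string such as "21,246,550,132".
    public static OcrBox Parse(string value)
    {
        int[] parts = value.Split(',')
                           .Select(p => int.Parse(p.Trim(), CultureInfo.InvariantCulture))
                           .ToArray();
        if (parts.Length != 4)
        {
            throw new FormatException("Expected four comma-separated integers: " + value);
        }
        return new OcrBox(parts[0], parts[1], parts[2], parts[3]);
    }
}
```

For example, OcrBox.Parse("21,246,550,132").Bottom is 378.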

The following is the JSON response from Cognitive Services.

```json
{
  "TextAngle": 0.0,
  "Orientation": "NotDetected",
  "Language": "en",
  "Regions": [
    {
      "BoundingBox": "21,246,550,132",
      "Lines": [
        {
          "BoundingBox": "21,246,550,33",
          "Words": [
            { "BoundingBox": "21,247,85,32", "Text": "How" },
            { "BoundingBox": "118,246,140,33", "Text": "Sundar" },
            { "BoundingBox": "273,246,121,33", "Text": "Pichai" },
            { "BoundingBox": "410,247,161,32", "Text": "Became" }
          ]
        },
        {
          "BoundingBox": "39,292,509,33",
          "Words": [
            { "BoundingBox": "39,293,72,32", "Text": "The" },
            { "BoundingBox": "126,293,99,32", "Text": "Most" },
            { "BoundingBox": "240,292,172,33", "Text": "Powerful" },
            { "BoundingBox": "428,292,120,33", "Text": "Indian" }
          ]
        },
        {
          "BoundingBox": "155,338,294,40",
          "Words": [
            { "BoundingBox": "155,338,118,33", "Text": "Inside" },
            { "BoundingBox": "287,338,162,40", "Text": "Google?" }
          ]
        }
      ]
    }
  ]
}
```
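The response nests words inside lines inside regions, so rebuilding plain text means joining each line's words with spaces and then joining the lines. The sketch below does that with System.Text.Json, which is built into .NET Core 3.0 and later and is available as a NuGet package for .NET Framework. OcrText and its model classes are hypothetical; the deserializer matches property names case-insensitively, so it accepts both the PascalCase names shown above and camelCase names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

public static class OcrText
{
    // Hypothetical model covering only the members used here; other properties are ignored.
    public class Word
    {
        public string BoundingBox { get; set; } = "";
        public string Text { get; set; } = "";
    }

    public class Line
    {
        public string BoundingBox { get; set; } = "";
        public List<Word> Words { get; set; } = new List<Word>();
    }

    public class Region
    {
        public string BoundingBox { get; set; } = "";
        public List<Line> Lines { get; set; } = new List<Line>();
    }

    public class OcrResult
    {
        public string Language { get; set; } = "";
        public List<Region> Regions { get; set; } = new List<Region>();
    }

    // Joins words with spaces and lines with "\n", in the order the service returned them.
    public static string Extract(string json)
    {
        var options = new JsonSerializerOptions { PropertyNameCaseInsensitive = true };
        OcrResult result = JsonSerializer.Deserialize<OcrResult>(json, options);
        IEnumerable<string> lines = result.Regions
            .SelectMany(region => region.Lines)
            .Select(line => string.Join(" ", line.Words.Select(word => word.Text)));
        return string.Join("\n", lines);
    }
}
```

Applied to the response above, Extract produces three lines: "How Sundar Pichai Became", "The Most Powerful Indian", and "Inside Google?".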

Recognize Handwriting

For handwriting recognition, I have used the same application; you only have to point the APIuri value to the recognizeText endpoint and update the RequestParameters value from Step 3, as shown below.

```xml
<appSettings>
  <add key="RequestParameters" value="handwriting=true" />
  <add key="APIuri" value="https://westcentralus.api.cognitive.microsoft.com/vision/v1.0/recognizeText?" />
  <!-- Replace with your own subscription key -->
  <add key="Subscription-Key" value="YOUR-SUBSCRIPTION-KEY" />
  <!-- The image is posted as raw bytes, so the body is binary data -->
  <add key="Contenttypes" value="application/octet-stream" />
</appSettings>
```

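Also, add the following ReadHandwritttingFromImage async method, and in the btnSubmit_Click event handler, call it in place of CallAPIforOCR. Unlike the OCR call, the handwriting call works in two steps: the POST returns an Operation-Location response header, and a GET on that URL retrieves the recognition result.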

```csharp
static async System.Threading.Tasks.Task<string> ReadHandwritttingFromImage(string LocalimageFilePath)
{
    using (var ComputerVisionAPIclient = new HttpClient())
    {
        try
        {
            ComputerVisionAPIclient.DefaultRequestHeaders.Add(
                "Ocp-Apim-Subscription-Key", Subscriptionkey());
            string APIuri = ReadURI() + RequestParameters();

            var content = new ByteArrayContent(GetByteArray(LocalimageFilePath));
            content.Headers.ContentType = new MediaTypeHeaderValue(Contenttypes());

            // The POST only starts the job; the result URL comes back
            // in the Operation-Location response header.
            HttpResponseMessage myresponse =
                await ComputerVisionAPIclient.PostAsync(APIuri, content);
            myresponse.EnsureSuccessStatusCode();
            string operationLocation = myresponse.Headers.GetValues("Operation-Location").First();

            // Poll the result URL until the status is Succeeded or Failed
            // (10 attempts, 1 second apart, is an arbitrary limit).
            for (int attempt = 0; attempt < 10; attempt++)
            {
                await System.Threading.Tasks.Task.Delay(1000);
                myresponse = await ComputerVisionAPIclient.GetAsync(operationLocation);
                responsehandwritting = await myresponse.Content.ReadAsStringAsync();
                if (System.Text.RegularExpressions.Regex.IsMatch(responsehandwritting,
                        "\"status\"\\s*:\\s*\"(Succeeded|Failed)\"",
                        System.Text.RegularExpressions.RegexOptions.IgnoreCase))
                {
                    break;
                }
            }
            return responsehandwritting;
        }
        catch (Exception e)
        {
            EventLog.WriteEntry("Handwritting Recognition Error",
                e.Message + " Trace: " + e.StackTrace,
                EventLogEntryType.Error, 121, short.MaxValue);
            return string.Empty;
        }
    }
}
```

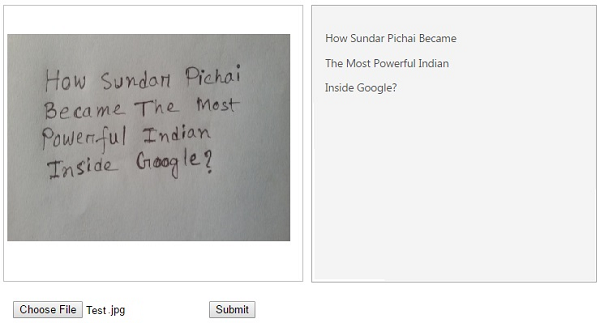

Now, run the Web application again. When the page is displayed, click the Browse button and open a local image with handwritten text on it. Click the Submit button to see the output.

Figure 2: Output of handwriting recognition
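The handwriting result has a different layout from the OCR response. Based on my reading of the v1.0 recognizeText documentation, a finished result looks roughly like {"status": "Succeeded", "recognitionResult": {"lines": [...]}}, where each line carries a text value and a boundingBox array of eight numbers (the four corner points). Treat that shape as an assumption and check it against a real response. HandwritingText below is a hypothetical helper that returns the recognized lines from such a result.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

public static class HandwritingText
{
    // Assumed result shape (verify against a real response):
    // { "status": "Succeeded", "recognitionResult": { "lines": [ { "boundingBox": [...], "text": "..." } ] } }
    public class Line
    {
        public double[] BoundingBox { get; set; } = Array.Empty<double>();
        public string Text { get; set; } = "";
    }

    public class Result
    {
        public List<Line> Lines { get; set; } = new List<Line>();
    }

    public class Operation
    {
        public string Status { get; set; } = "";
        public Result RecognitionResult { get; set; } = new Result();
    }

    // Returns the text of each recognized line, or throws if the operation has not succeeded.
    public static IReadOnlyList<string> Lines(string json)
    {
        var options = new JsonSerializerOptions { PropertyNameCaseInsensitive = true };
        Operation operation = JsonSerializer.Deserialize<Operation>(json, options);
        if (!string.Equals(operation.Status, "Succeeded", StringComparison.OrdinalIgnoreCase))
        {
            throw new InvalidOperationException("Operation status is '" + operation.Status + "'.");
        }
        return operation.RecognitionResult.Lines.Select(line => line.Text).ToList();
    }
}
```

Throwing on any status other than Succeeded keeps a still-running or failed operation from being mistaken for an empty result.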

Conclusion

I hope this article has given you enough information and code samples to get started quickly with OCR and handwriting recognition. Keep exploring Codeguru articles.